tl;dr: Version 1.0 of Obnam, my snapshotting, de-duplicating, encrypting backup program, is released.

See the end of this announcement for the details.

Where we see the hero in his formative years; parental influence

From the very beginning, my computing life has involved backups.

In 1984, when I was 14,

my father was an independent

telecommunications consultant, which meant he needed a personal computer

for writing reports. He bought a

Luxor ABC-802,

a Swedish computer with a Z80 microprocessor and two floppy drives.

My father also taught me how to use it. When I needed to

save files, he gave me not one, but two floppies, and explained

that I should store my files on one, and then copy them to the

other one every now and then.

Later on, over the years, I've made backups from a hard disk

(30 megabytes!) to

a stack of floppies, to a tape drive installed into

a floppy interface (400 megabytes!), to a DAT drive, and various other media.

It was always a bit tedious.

The start of the quest; lengthy justification for NIH

In 2004, I decided to do a full backup, by burning a copy of all my

files onto CD-R disks. It took me most of the day. Afterwards, I sat

admiring the large stack of disks, and realized that I would not ever

do that again. I'm too lazy for that. That I had done it once was an

aberration in the space-time continuum.

Switching to DVD-Rs instead of CD-Rs would reduce the number of disks to

burn, but not enough: it would still take a stack of them.

I needed something much better.

I had a little experience with tape drives, and that was enough to convince

me that I didn't want them. Tape drives are expensive hardware,

and the tapes also cost money. If the drive goes bad, you have to get

a compatible one, or all your backups are toast. The price per gigabyte

was coming down fast for hard drives, and it was clear that they were

about to be very competitive with tapes for price.

I looked for backup programs that I could use for disk based backups.

rsync, of course, was the obvious choice, but there were others.

I ended up doing what many geeks do: I wrote my own wrapper around

rsync. There are hundreds, possibly thousands, of such wrappers around

the Internet.

I also got the idea that doing a startup to provide online backup

space would be a really cool thing. However, I didn't really do

anything about that until 2007. More on that later.

The rsync wrapper script I wrote used hardlinked directory trees

to provide a backup history, though not in the smart way that

backuppc does it.

The hardlinks were wonderful, because they were

cheap, and provided de-duplication. They were also quite cumbersome,

when I needed to move my backups to a new disk the first time. It

turned out that a lot of tools deal very badly with directory trees

with large numbers of hardlinks.
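The hardlink idea can be sketched in a few lines of Python. This is not the wrapper script described above (which is not shown here), only a minimal illustration: the function names `snapshot` and `_same` are made up for the example, and treating a file as unchanged when its size and modification time match is an assumption.

```python
import os
import shutil


def _same(a, b):
    """Assume two files are identical if size and mtime (in seconds) match."""
    sa, sb = os.stat(a), os.stat(b)
    return sa.st_size == sb.st_size and int(sa.st_mtime) == int(sb.st_mtime)


def snapshot(source, previous, target):
    """Copy `source` into `target`, hardlinking files unchanged since `previous`.

    `previous` is the directory of the preceding snapshot, or None for the
    very first one.
    """
    for dirpath, dirnames, filenames in os.walk(source):
        rel = os.path.relpath(dirpath, source)
        os.makedirs(os.path.normpath(os.path.join(target, rel)), exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.normpath(os.path.join(target, rel, name))
            old = os.path.join(previous, rel, name) if previous else None
            if old and os.path.isfile(old) and _same(src, old):
                os.link(old, dst)       # unchanged: share the old copy's data
            else:
                shutil.copy2(src, dst)  # new or changed: copy data and timestamps
```

Every snapshot directory looks like a full copy, but an unchanged file costs only a directory entry. The flip side is the one mentioned above: the trees end up with very large numbers of hardlinks.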

I also decided I wanted encrypted backups. This led me to find

duplicity, which is a nice program

that does encrypted backups, but I had issues with some of its

limitations. To fix those limitations, I would have had to re-design

and possibly re-implement the entire program. The biggest limitation

was that it treated backups as a full backup, plus a sequence of

incremental backups, which were deltas against the previous backup.

Delta based incrementals make sense for tape drives. You run a full

backup once, then incremental deltas for every day. When enough time

has passed since the full backup, you do a new full backup, and then

future incrementals are based on that. Repeat forever.

I decided that this makes no sense for disk based backups. If I already

have backed up a file, there's no point in making me back it up again,

since it's already there on the same hard disk. It makes even less

sense for online backups, since doing a new full backup would require

me to transmit all the data all over again, even though it's already

on the server.

The first battle

I could not find a program that did what I wanted to do, and like

every good NIHolic, I started writing my own.

After various aborted attempts, I started for real in 2006. Here is

the first commit message:

revno: 1

committer: Lars Wirzenius <liw@iki.fi>

branch nick: wibbr

timestamp: Wed 2006-09-06 18:35:52 +0300

message:

Initial commit.

wibbr was the placeholder name for Obnam until we came up with

something better. We was myself and Richard Braakman, who was going

to be doing the backup startup with me. We eventually founded the

company near the end of 2006, and started doing business in 2007.

However, we did not do very much business, and ran out of money in

September 2007. We ended the backup startup experiment.

That's when I took a job with Canonical, and Obnam became a hobby

project of mine: I still wanted a good backup tool.

In September 2007, Obnam was working, but it was not very good.

For example, it was quite slow and wasteful of backup space.

That version of Obnam used deltas, based on the rsync algorithm, to

back up only changes. It did not require the user to do full and

incremental backups manually, but essentially created an endless

sequence of incrementals. It was possible to remove any generation,

and Obnam would manage the deltas as necessary, keeping the ones

needed for the remaining generations, and removing the rest.

Obnam made it look as if each generation was independent of the others.

The wasteful part was the way in which metadata about files was

stored: each generation stored the full list of filenames and their

permissions and other inode fields. This turned out to be bigger

than my daily delta.

The lost years; getting lost in the forest

For the next two years, I did a little work on Obnam, but I did not

make progress very fast. I changed the way metadata was stored, for

example, but I picked another bad way of doing it: the new way was

essentially building a tree of directory and file nodes, and any

unchanged subtrees were shared between generations. This reduced the

space overhead per generation, but made it quite slow to look up

the metadata for any one file.

The final battle; finding cows in the forest

In 2009 I decided to leave Canonical and after that, my Obnam hobby

picked up speed again. Below is a table of the number of commits

per year, from the very first commit

(bzr log -n0 | awk '/timestamp:/ { print $3 }' | sed 's/-.*//' | uniq -c | awk '{ print $2, $1 }' | tac):

2006 466

2007 353

2008 402

2009 467

2010 616

2011 790

2012 282

During most of 2010 and 2011 I was unemployed, and happily hacking

Obnam, while moving to another country twice. I don't recommend that

as a way to hack on hobby projects, but it worked for me.

After Canonical, I decided to tackle the way Obnam stores data from

a new angle. Richard told me about the copy-on-write (or COW) B-trees that

btrfs uses, originally designed by Ohad Rodeh

(see

his paper

for details),

and I started reading about that. It turned out that

they're pretty ideal for backups: each B-tree stores data about

one generation. To start a new generation, you clone the previous

generation's B-tree, and make any modifications you need.

I implemented the B-tree library myself, in Python.

I wanted something that

was flexible about how and where I stored data, which the btrfs

implementation did not seem to give me. (Also, I worship at the

altar of NIH.)
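To show why cloning a tree per generation is cheap, here is a minimal copy-on-write sketch. It is deliberately not a B-tree and not Obnam's library: it is a plain binary search tree with path copying, and `Node`, `insert` and `lookup` are names invented for the illustration. The property it shares with the COW B-trees described above is that a modification copies only the nodes on the path to the change, and everything else is shared with the previous generation.

```python
from typing import NamedTuple, Optional


class Node(NamedTuple):
    """An immutable tree node; 'modifying' it means building a new one."""
    key: str
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root, key, value):
    """Return the root of a new tree; nodes off the search path are shared."""
    if root is None:
        return Node(key, value)
    if key < root.key:
        return root._replace(left=insert(root.left, key, value))
    if key > root.key:
        return root._replace(right=insert(root.right, key, value))
    return root._replace(value=value)


def lookup(root, key):
    """Return the value stored for `key`, or None if it is absent."""
    while root is not None:
        if key == root.key:
            return root.value
        root = root.left if key < root.key else root.right
    return None


# Generation 1: metadata for three (hypothetical) files.
gen1 = None
for name, meta in [("m.txt", "v1"), ("a.txt", "v1"), ("z.txt", "v1")]:
    gen1 = insert(gen1, name, meta)

# Generation 2: "clone" by reusing the root, then change one file.
gen2 = insert(gen1, "z.txt", "v2")
```

After this, `gen1` still sees the old metadata for `z.txt`, `gen2` sees the new, and the untouched subtree holding `a.txt` is the very same object in both generations.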

With the B-trees, doing file deltas from the previous generation

no longer made any sense. I realized that it was, in any case, a

better idea to store file data in chunks, and re-use chunks in

different generations as needed. This makes it much easier to

manage changes to files: with deltas, you need to keep a long chain

of deltas and apply many deltas to reconstruct a particular version.

With lists of chunks, you just get the chunks you need.
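A toy version of the chunk idea, as a sketch only: the class name `ChunkStore`, the fixed 4-byte chunk size, and the use of SHA-256 digests as chunk identifiers are all assumptions made for the example, not a description of how Obnam actually splits or identifies chunks.

```python
import hashlib

CHUNK_SIZE = 4  # absurdly small, so the example is easy to follow


class ChunkStore:
    """Stores each distinct chunk once; a file version is a list of chunk ids."""

    def __init__(self):
        self.chunks = {}  # chunk id -> chunk bytes

    def put(self, data):
        """Store `data` unless an identical chunk exists; return its id."""
        chunk_id = hashlib.sha256(data).hexdigest()
        self.chunks.setdefault(chunk_id, data)
        return chunk_id

    def backup(self, content):
        """Split `content` into fixed-size chunks and return their ids."""
        return [self.put(content[i:i + CHUNK_SIZE])
                for i in range(0, len(content), CHUNK_SIZE)]

    def restore(self, chunk_ids):
        """Rebuild a file version directly from its chunk list."""
        return b"".join(self.chunks[c] for c in chunk_ids)


store = ChunkStore()
gen1 = store.backup(b"aaaabbbbcccc")  # three new chunks
gen2 = store.backup(b"aaaaXXXXcccc")  # only the middle chunk is new
```

Restoring either generation is a straight lookup of its chunk list, with no chain of deltas to replay, and removing a generation only means dropping the chunks no other list refers to.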

The spin-off franchise; lost in a maze of dependencies, all alike

In the process of developing Obnam, I have split off a number of

helper programs and libraries:

- genbackupdata

generates reproducible test data for backups

- seivot

runs benchmarks on backup software (although only Obnam for now)

- cliapp

is a Python framework for command line applications

- cmdtest

runs black box tests for Unix command line applications

- summain

makes diff-able file manifests (

md5sum on steroids),

useful for verifying that files are restored correctly

- tracing

allows run-time selectable debug log messages that are really

fast during normal production runs when messages are not printed

I have found it convenient to keep these split off, since I've been

able to use them in other projects as well. However, it turns out that

those installing Obnam don't like this: it would probably make sense to

have a fat release with Obnam and all dependencies, but I haven't bothered

to do that yet.

The blurb; readers advised about blatant marketing

The strong points of Obnam are, I think:

- Snapshot backups, similar to btrfs snapshot subvolumes.

Every generation looks like a complete snapshot,

so you don't need to care about full versus incremental backups, or

rotate real or virtual tapes.

The generations share data as much as possible,

so only changes are backed up each time.

- Data de-duplication, across files, and backup generations. If the

backup repository already contains a particular chunk of data, it will

be re-used, even if it was in another file in an older backup

generation. This way, you don't need to worry about moving around large

files, or modifying them.

- Encrypted backups, using GnuPG.

Backups may be stored on local hard disks (e.g., USB drives), any

locally mounted network file shares (NFS, SMB, almost anything with

remotely Posix-like semantics), or on any SFTP server you have access to.

What's not so strong is backing up online over SFTP, particularly with

long round trip times to the server, or many small files to back up.

That performance is Obnam's weakest part. I hope to fix that in the future,

but I don't want to delay 1.0 for it.

The big news; readers sighing in relief

I am now ready to release version 1.0 of Obnam. Finally. It's been

a long project, much longer than I expected, and much longer than

was really sensible. However, it's ready now. It's not bug free, and

it's not as fast as I would like, but it's time to declare it ready

for general use. If nothing else, this will get more people to use

it, and they'll find the remaining problems faster than I can do on

my own.

I have packaged Obnam for Debian, and it is in unstable, and will

hopefully get into wheezy before the Debian freeze. I provide

packages built for squeeze on my own repository;

see the download page.

The changes in the 1.0 release compared to the previous one:

- Fixed bug in finding duplicate files during a backup generation.

Thanks to Saint Germain for reporting the problem.

- Changed version number to 1.0.

The future; not including winning lottery numbers

I expect to get a flurry of bug reports in the near future as new people

try Obnam. It will take a bit of effort dealing with that. Help is, of

course, welcome!

After that, I expect to be mainly working on Obnam performance for the

foreseeable future. There may also be a FUSE filesystem interface for

restoring from backups, and a continuous backup version of Obnam. Plus

other features, too.

I make no promises about how fast new features

and optimizations will happen: Obnam is a hobby project for me, and I

work on it only in my free time. Also, I have a bunch of things that

are on hold until I get Obnam into shape, and I may decide to do one

of those things before the next big Obnam push.

Where; the trail of an errant hacker

I've developed Obnam in a number of physical locations, and I thought

it might be interesting to list them:

Espoo, Helsinki, Vantaa, Kotka, Raahe, Oulu, Tampere, Cambridge, Boston,

Plymouth, London, Los Angeles, Auckland, Wellington, Christchurch,

Portland, New York, Edinburgh, Manchester, San Giorgio di Piano.

I've also hacked on Obnam in trains, on planes, and once on a ship,

but only for a few minutes on the ship before I got seasick.

Thank you; sincerely

- Richard Braakman, for helping me with ideas, feedback, and some

code optimizations, and for doing the startup with me. Even though

he has provided little code, he's Obnam's most significant contributor

so far.

- Chris Cormack, for helping to build

Obnam for Ubuntu. I no longer use Ubuntu at all, so it's a big help to

not have to worry about building and testing packages for it.

- Daniel Silverstone, for spending a

Saturday with me hacking Obnam, and rewriting the way repository file

filters work (compression, encryption), thus making them not suck.

- Tapani Tarvainen for running Obnam for

serious amounts of real data, and for being patient while I fixed things.

- Soile Mottisenkangas for believing in me, and

helping me overcome periods of despair.

- Everyone else who has tried Obnam and reported bugs or provided any

other feedback. I apologize for not listing everyone.

SEE ALSO

After seeing some book descriptions recently on planet debian,

let me add a short recommendation, too.

Almost everyone has heard about Gulliver's Travels already,

though usually only in a very cursory way. For example: did you know the book

describes 4 journeys and not only the travel to Lilliput?

Given how influential the book has been, that is even more surprising.

Words like "endian" or "yahoo" originate from it.

My favorite is the third travel, though, especially the academy of Lagado,

from which I want to share two gems:

"

His lordship added, 'That he would not, by any further particulars, prevent the pleasure I

should certainly take in viewing the grand academy, whither he was resolved I should go.' He

only desired me to observe a ruined building, upon the side of a mountain about three miles

distant, of which he gave me this account: 'That he had a very convenient mill within half a

mile of his house, turned by a current from a large river, and sufficient for his own family, as

well as a great number of his tenants; that about seven years ago, a club of those projectors

came to him with proposals to destroy this mill, and build another on the side of that mountain,

on the long ridge whereof a long canal must be cut, for a repository of water, to be conveyed up

by pipes and engines to supply the mill, because the wind and air upon a height agitated the

water, and thereby made it fitter for motion, and because the water, descending down a

declivity, would turn the mill with half the current of a river whose course is more upon a

level.' He said, 'that being then not very well with the court, and pressed by many of his

friends, he complied with the proposal; and after employing a hundred men for two years, the

work miscarried, the projectors went off, laying the blame entirely upon him, railing at him

ever since, and putting others upon the same experiment, with equal assurance of success, as

well as equal disappointment.'

"

"I went into another room, where the walls and ceiling were all hung

round with cobwebs, except a narrow passage for the artist to go in and out.

At my entrance, he called aloud to me, 'not to disturb his webs.' He

lamented 'the fatal mistake the world had been so long in, of using

silkworms, while we had such plenty of domestic insects who infinitely excelled

the former, because they understood how to weave, as well as spin.'

And he proposed further, 'that by employing spiders, the charge of dyeing

silks should be wholly saved;' whereof I was fully convinced, when he

showed me a vast number of flies most beautifully coloured, wherewith he fed

his spiders, assuring us 'that the webs would take a tincture from them; and as

he had them of all hues, he hoped to fit everybody's fancy, as soon as he

could find proper food for the flies, of certain gums, oils, and other

glutinous matter, to give a strength and consistence to the threads.'"

Finally I've found time to look back at my vacation in Rome and post some pictures. It was a great time there and I really enjoyed it.

I would like to thank Luigi Gangitano for showing us some interesting places in the city.

And now back to pictures:

Current status

Back in September,

I

I mentioned this briefly yesterday, but now I'll try to summarize the

story of a great surprise and a big moment for me.

Branches are marked with green rectangles, and tags with yellow arrows.

What we have here (expected given our configuration of the tool) are

branches (e.g.

Branches that were not merged using svn merge look like they were not

merged at all.

Before being in SVN, the repository was stored in CVS. When it was imported

into SVN, no special attention was given to the commit author. Hence I

got credited for changes I did not write.

The tool leaves all branches, including removed ones (with tag on their end)

so that you can decide what to do with them.

The branch

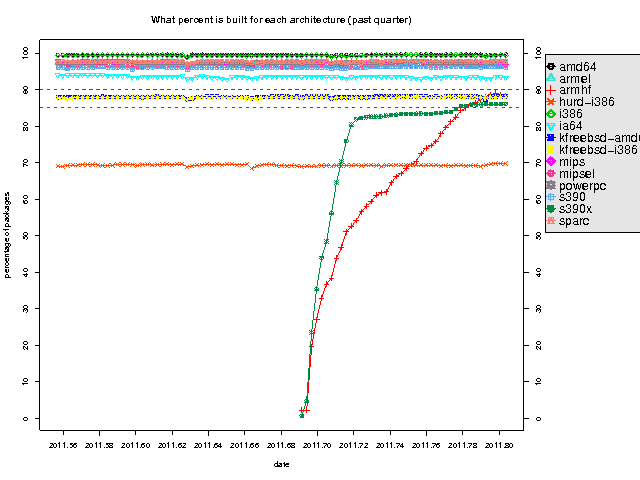

The branch  Quite a few things have happened recently in the Debian/ARM land

Quite a few things have happened recently in the Debian/ARM land A somewhat na ve young man, a kidnapping, a policeman's daughter, a night club singer, said policeman's partner being involved, a love triangle, a pervert and (probably because it's Lynch) a cut off ear. What more do you need ... ? Or, in other words, I absolutely regret not having seen

A somewhat na ve young man, a kidnapping, a policeman's daughter, a night club singer, said policeman's partner being involved, a love triangle, a pervert and (probably because it's Lynch) a cut off ear. What more do you need ... ? Or, in other words, I absolutely regret not having seen  Earlier today, I went to a local copy shop and had my diploma thesis printed. This afternoon, I will hand it in. The title is

Earlier today, I went to a local copy shop and had my diploma thesis printed. This afternoon, I will hand it in. The title is  Just wanted to share comments on some movies I've watched recently.

Just wanted to share comments on some movies I've watched recently.

Whew!

Is it karma or what? What makes me get involved in two horribly complex, two-week-long conferences, year after year? Of course, both (DebConf and EDUSOL) are great fun to be part of, and both have greatly influenced both my skills and interests.

Anyway, this is the fifth year we hold EDUSOL. Tomorrow we will bring the two weeks of activities to an end, hold the last two videoconferences, and finally declare it a done deal. I must anticipate the facts and call it a success, as it clearly will be recognized as such.

The videoconferences are one of the most visible (although, we insist, not the core) activities of the Encounter. They are certainly among the most complex. Their value is greatly enhanced because, even if they are naturally a synchronous activity (each takes place at a given point in time), they live on after they are held: I do my best to publish them as soon as possible (less than one day later), and they are posted to their node, where comments can continue. This was the reason why, for example, we decided at the last minute to move tomorrow's conference: due to a misunderstanding, Beatriz Busaniche (a good friend of ours and a very renowned Argentinian Free Software promoter, from
